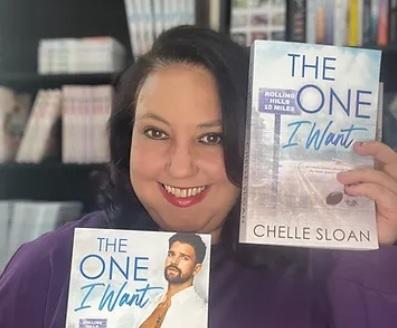

The 2023 national theme for Women's History Month is "Women Who Tell Our Stories," which aims to recognize women who have made an impact in media and storytelling.

The Scripps College of Communication produces some of the world's best storytellers. Throughout Women's History Month, we want to celebrate OHIO alumnae who are using their Scripps experience in the field and doing the work to share stories through their professional mediums.